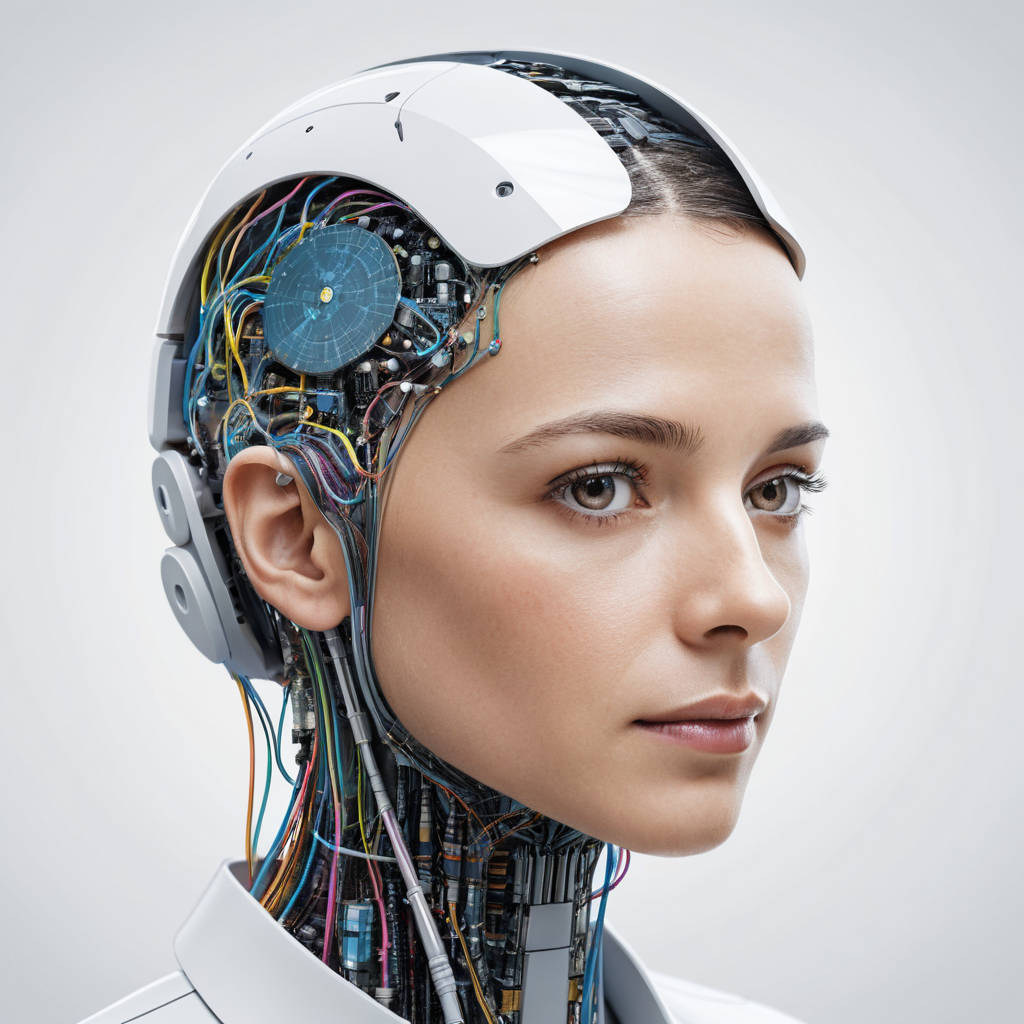

Artificial Intelligence (AI) is reshaping workflows, tools, and applications at remarkable speed, and developments over the past few years have already changed how many industries operate. This week, we focus on some of the most significant recent advancements: OpenAI’s latest ChatGPT app updates, including new integrations with desktop applications, and the rapidly expanding ecosystem of generative AI tools. Together, these changes could noticeably improve productivity and streamline workflows across many industries.

Beyond redefining how we approach tasks, AI is producing a range of tools that make everyday operations more efficient. Let’s explore some of the latest advancements and how they affect productivity, especially in generative AI.

OpenAI’s recent expansion of ChatGPT’s capabilities marks a pivotal shift in how AI interacts with day-to-day software. Two developments stand out: the release of the ChatGPT desktop app for Windows, and a new feature in the macOS desktop app that lets ChatGPT work with applications such as VS Code and Terminal. With it, ChatGPT can pull context directly from these desktop environments, so its answers reflect what the user is actually working on. But why is this such a game-changer? Let’s delve deeper into its key features.

A primary feature that sets the new desktop ChatGPT apart is its ability to read code directly from applications like VS Code. This allows developers to use ChatGPT as a debugging assistant that can see the code they are working on, so its suggestions for problem-solving and scripting take the surrounding code into account. This is particularly helpful for tasks like optimizing code, identifying bugs, or generating specific snippets to streamline coding projects.

Currently, the integration is focused on code editors, with VS Code as a standout example. However, OpenAI plans to expand this functionality beyond code editors to include other applications that users interact with frequently. Imagine ChatGPT working directly with spreadsheet software to help analyze data or create visualizations based on user prompts, or integrating with email clients to draft responses more effectively. This vision of expansion points toward a future where AI is a true multitasking companion across all aspects of digital work.

Previously, ChatGPT was mostly used in a web browser. Now, Windows users can take advantage of the new desktop app, which gives them easier and quicker access to AI assistance alongside their other applications. The app still requires an internet connection, since the model runs on OpenAI’s servers rather than on the user’s machine, but having ChatGPT available outside the browser makes it easier to fold into a local workflow, blurring the lines between local applications and cloud-based AI tools.

The Terminal integration further shows how AI is evolving into a productivity tool for non-developers as well. Users who are not well-versed in shell commands can describe in plain language what they want to do, such as navigating to a directory or running a task, and ChatGPT can suggest the commands to execute, using what is visible in Terminal as context. This lowers the barrier to entry for many people, allowing them to perform what were previously considered “expert-level” tasks, which can contribute to broader adoption of technology and improve productivity at every skill level.

Generative AI tools are pushing the boundaries of how we interact with technology and automation. These tools are evolving rapidly, providing new ways to automate and enhance everyday productivity. Here, we explore a few of the most impactful tools that are currently transforming the landscape.

One of the most exciting generative tools highlighted this week is Chatbase, a platform that can connect Notion pages to chatbots, effectively turning static knowledge bases into conversational interfaces. For companies and teams, this can be valuable for managing internal documentation, drafting content, and automating workflows.

With Chatbase, internal documentation becomes a lot more accessible. Instead of manually searching for specific information, employees can query a chatbot to get answers instantly. This technology saves time and minimizes the frustration that often comes with navigating large volumes of information. Moreover, it offers a more conversational way to manage and interact with company databases, which can reduce onboarding time for new employees and improve efficiency across the organization.

Creating effective AI prompts is one of the keys to getting the best out of generative AI. However, crafting prompts that yield optimal results can be challenging, especially for those who are not experienced in AI interaction. Tools like “Sam the Prompt Creator” and Anthropic’s prompt enhancer aim to simplify this process by automatically generating or improving user inputs.

These tools are particularly beneficial for individuals who use AI for repetitive tasks or automations. By creating clear, effective prompts, users can optimize workflows without needing to manually intervene or adjust prompts continuously. Whether you’re automating customer support tasks or building creative content with AI, prompt improvement tools make the process significantly more efficient.

Another notable innovation in the realm of generative tools is Qwen2.5-Coder, an open-source code generation model from Alibaba’s Qwen team that brings competition to a landscape currently dominated by proprietary solutions like Claude 3.5. A distinctive aspect of Qwen2.5-Coder is that it provides code generation with visualizations, which not only helps developers generate code faster but also gives a clearer understanding of the logic behind the generated content.

This feature is particularly useful for novice programmers who may need additional help understanding complex code snippets. The visualizations act as a learning tool, bridging the gap between code generation and knowledge transfer and helping users not only get code but also understand how it works and why it is implemented a certain way.

Running large language models (LLMs) locally has always been a challenging prospect for individuals and smaller enterprises, largely due to the substantial hardware requirements. This week, a particularly exciting development caught attention—connecting multiple Mac Minis via Thunderbolt 5 to create a powerful, scalable LLM environment.

By pooling several Mac Minis, users are able to run advanced models like Llama 70B locally. This has significant implications for privacy and cost control: it removes the need to rely on third-party cloud services, so sensitive data can stay on the user’s own hardware. Here are some key advantages of this approach:

The entire setup costs approximately $8,800, a price point that significantly undercuts the traditional GPU-powered hardware that would be needed to handle similar workloads. This puts serious local AI computing within reach of smaller companies and even individual enthusiasts, democratizing access to powerful LLM technology.

Another major advantage of this Mac Mini configuration is its scalability. By daisy-chaining devices, users can add computational power as their needs evolve, without the substantial initial investment that typically comes with deploying powerful GPUs. This modular setup approach provides flexibility that is particularly valuable for users whose AI needs may fluctuate.
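To see why the number of machines matters, it helps to estimate how much memory a model’s weights need. The Python sketch below is a rough calculation under stated assumptions: weights are assumed to dominate memory use (the KV cache, activations, and runtime overhead are ignored), and each machine is assumed to contribute a hypothetical 48 GB of usable memory. The quantization level and per-machine configuration of the setup described above are not given here, so the numbers are illustrative only.

```python
import math

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes).

    Ignores the KV cache, activations, and runtime overhead, so real usage is higher.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def machines_needed(num_params: float, precision: str, usable_gb_per_machine: float) -> int:
    """Smallest number of machines whose combined usable memory holds the weights."""
    return math.ceil(weight_memory_gb(num_params, precision) / usable_gb_per_machine)


if __name__ == "__main__":
    params = 70e9      # a 70-billion-parameter model
    usable_gb = 48.0   # hypothetical usable memory per machine; adjust for real hardware
    for precision in ("fp16", "int8", "int4"):
        gb = weight_memory_gb(params, precision)
        n = machines_needed(params, precision, usable_gb)
        print(f"{precision}: ~{gb:.0f} GB of weights -> {n} machine(s) at {usable_gb:.0f} GB each")
```

Under these assumptions, a 70B model needs about 140 GB for 16-bit weights (three machines), about 70 GB at 8 bits (two), and about 35 GB at 4 bits (one), which shows how quantization and adding machines trade off against each other.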

While the Mac Mini-based solution offers impressive affordability and privacy, it’s important to recognize the limitations. Compared to traditional GPU-based supercomputing, this configuration still lacks the processing power to run the absolute largest models as efficiently as a state-of-the-art data center might. Nevertheless, for many businesses and personal projects, the performance is more than adequate and represents a feasible stepping-stone solution.
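The source does not describe how the model is divided among the machines. One common approach, assumed here, is pipeline-style sharding: each machine holds a contiguous block of the model’s layers, sized in proportion to its memory, and activations pass from one machine to the next. The hypothetical `partition_layers` function below sketches that assignment; the layer count of 80 and the memory figures in the example are illustrative values, not the configuration of the setup described above.

```python
def partition_layers(num_layers: int, memory_gb: list[float]) -> list[range]:
    """Split layers [0, num_layers) into contiguous ranges, one per machine,
    sized roughly in proportion to each machine's memory."""
    if num_layers < 0:
        raise ValueError("num_layers must be non-negative")
    if not memory_gb or any(m <= 0 for m in memory_gb):
        raise ValueError("memory_gb must list at least one positive value")

    total = sum(memory_gb)
    ranges: list[range] = []
    start = 0
    cumulative = 0.0
    for i, mem in enumerate(memory_gb):
        cumulative += mem
        if i == len(memory_gb) - 1:
            # The last machine always ends at num_layers so rounding never drops a layer.
            end = num_layers
        else:
            end = round(num_layers * cumulative / total)
        ranges.append(range(start, end))
        start = end
    return ranges


if __name__ == "__main__":
    # Two machines with equal memory, then the same cluster with a smaller third machine added.
    print(partition_layers(80, [64, 64]))      # [range(0, 40), range(40, 80)]
    print(partition_layers(80, [64, 64, 32]))  # [range(0, 32), range(32, 64), range(64, 80)]
```

Adding a machine simply shrinks every existing machine’s share, which is what makes this kind of cluster easy to grow. The trade-off is that each generated token must pass through every machine in turn, so the interconnect (here, Thunderbolt 5) affects generation speed.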

In recent times, the concept of agentic AI—that is, AI systems capable of autonomously executing multi-step workflows—has generated considerable excitement. From automating web interactions to handling complex back-office operations, agentic systems hold immense promise. However, despite significant advancements, practical implementations still fall short of expectations.

Multi-agent systems like Microsoft’s new agentic frameworks aim to handle various tasks, such as opening Excel files, analyzing content, and feeding data into other applications. While the idea is promising, real-world scenarios reveal numerous inconsistencies. For instance, many tasks that require conditional logic or involve external APIs continue to trip up these agents, making human intervention necessary more often than anticipated.

The complexity of these multi-agent systems also raises issues with transparency and accountability. When things go wrong in an automated multi-step process, identifying where the error occurred can be cumbersome. While the concept of agentic AI is certainly a glimpse into the future of automation, it’s clear that further research and development are needed to achieve the desired level of reliability and functionality.
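One reason failures are hard to trace is that a multi-step run often surfaces only its final error. A common mitigation is to record the outcome of every step. The minimal Python sketch below illustrates that idea; it is not the API of Microsoft’s frameworks or any other agent library, and `run_pipeline` and the three step functions are hypothetical stand-ins for steps such as opening a spreadsheet, analyzing its contents, and passing the result on.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class StepRecord:
    """What happened at one step of the workflow."""
    name: str
    ok: bool
    error: str | None = None


@dataclass
class PipelineResult:
    output: Any
    trace: list[StepRecord] = field(default_factory=list)
    failed_step: str | None = None


def run_pipeline(steps: list[tuple[str, Callable[[Any], Any]]], initial: Any) -> PipelineResult:
    """Run steps in order, feeding each step's output into the next.

    Stops at the first exception and records which step failed and why,
    instead of letting the error surface without any context.
    """
    trace: list[StepRecord] = []
    value = initial
    for name, step in steps:
        try:
            value = step(value)
        except Exception as exc:  # record any failure, then stop the run
            trace.append(StepRecord(name, ok=False, error=f"{type(exc).__name__}: {exc}"))
            return PipelineResult(output=None, trace=trace, failed_step=name)
        trace.append(StepRecord(name, ok=True))
    return PipelineResult(output=value, trace=trace)


# Hypothetical steps: load data, analyze it, hand the result to the next stage.
def load_rows(_: Any) -> list[dict[str, str]]:
    return [{"amount": "10"}, {"amount": "n/a"}]


def sum_amounts(rows: list[dict[str, str]]) -> float:
    return sum(float(row["amount"]) for row in rows)


def format_report(total: float) -> str:
    return f"Total: {total:.2f}"


if __name__ == "__main__":
    result = run_pipeline(
        [("load_rows", load_rows), ("sum_amounts", sum_amounts), ("format_report", format_report)],
        None,
    )
    print(result.failed_step)  # sum_amounts
    for record in result.trace:
        print(record)
```

Here the trace shows that loading succeeded and the summing step failed on a non-numeric value, so a human reviewer knows exactly where to intervene. Real agent systems would typically need to log richer detail, such as inputs, model outputs, and tool calls, for the same purpose.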

The current limitations of agentic AI systems don’t take away from their potential. Given the right kind of iterative improvement and the implementation of feedback mechanisms, agentic systems could drastically change industries like customer service, logistics, and even finance. As these technologies mature, we can expect to see a gradual but undeniable shift towards increased AI autonomy, reducing the need for repetitive human input and supervision.

The advancements in AI tools and integrations in recent weeks underscore the growing versatility and influence of AI in everyday workflows. From the enhanced capabilities of ChatGPT in app-based environments to the scalable, cost-effective use of Mac Minis for running powerful language models, these innovations are paving the way for smarter, more efficient systems.

Generative AI tools like Chatbase and prompt enhancement technologies are pushing the boundaries of productivity, making once-difficult tasks achievable with minimal effort. However, challenges remain—particularly in the consistency and reliability of multi-agent AI systems—that must be addressed for broader, mainstream adoption.

These developments in AI integration provide a fascinating glimpse into the future, where technology not only assists us but integrates seamlessly into every facet of our professional and personal lives. We are on the threshold of a new era in AI, one characterized by more accessible, powerful, and intelligent tools that bring us closer to realizing the full potential of artificial intelligence.

The future looks promising, but the journey is only beginning. As AI continues to evolve, it will be crucial for both developers and users to adapt and learn, ensuring that we harness these technologies responsibly and to their fullest potential. The next chapter in AI’s story is waiting to be written—and we’re all invited to play a part.